Rearchitecting Apache Pulsar to handle 100 million topics, Part 4

Tags: pulsar-ng- Context

- Project “Apache Pulsar NextGen”

- Topic lookup and assignment flow - components and interaction

- Summary

Context

Welcome to continue to read about the possible design for rearchitecting Apache Pulsar to handle 100 million topics.

The previous blog post explains the context.

Project “Apache Pulsar NextGen”

Rearchitecting Apache Pulsar to handle 100 million topics is one of the goals of the next generation architecture for Apache Pulsar. This architecture is unofficial and it hasn’t been approved or decided by the Apache Pulsar project. Before the project can decide, it is necessary to demonstrate that the suggested changes in the architecture are meaningful and address the problems that have become limiting factors for the future development of Apache Pulsar.

Summary of goals

- introduce a high level architecture with a sharding model that scales from 1 to 100 million topics and beyond.

- address the micro-outages issue with Pulsar topics. This was explained in the blog post “Pulsar’s high availability promise and its blind spot”.

- provide a solid foundation for Pulsar future development by getting rid of the current namespace bundle centric design which causes unnecessary limitations and complexity to any improvements in Pulsar load balancing.

The way forward

The previous blog post explained the next milestone, the minimal implementation to integrate the new metadata layer to Pulsar. This blog post will continue with the detailed design that is necessary to get the implementation going.

Topic lookup and assignment flow - components and interaction

The plan for the first steps in the implementation is to restore pulsar-discovery-service (already in progress in develop branch) and then start modifying the solution where we migrate towards the new architecture.

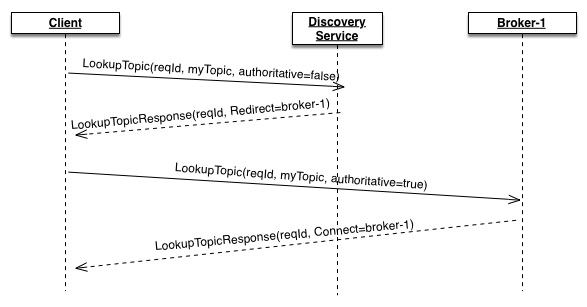

As explained in the previous blog post, a Pulsar client will connect to the discovery service for lookup.

This is a point where we will start the “walking skeleton” by keeping the solution “working” at least for the “happy path” of Pulsar consumers and producers. In the new architecture, the topic lookup will trigger the topic assignment. That is the reason why it’s useful to call the end-to-end flow as “topic lookup and assignment flow”.

Components participating in the topic lookup and assignment flow

There was a draft listing of possible components in one of the previous blog posts:

- topic shard inventory

- tenant shard inventory

- namespace shard inventory

- topic controller

- cluster load manager

- broker load manager

This could be a starting point for describing the components and the roles.

Component communications

In the architecture, there’s a need for communication from each sharded component to components of other shards. The initial plan is to use either gRPC or RSocket protocol for implementing this communication.

The discovery of other shards is via the “cluster manager” component. That provides services for service discovery.

The current assumption is that each shard is forms a Raft group and all components are sharded across these shards. The cluster manager would form it’s own separate Raft group.

Whenever the leader of the shard gets assigned, it will connect to the cluster manager and update the leader’s endpoint address. All nodes of all shards will keep a connection to the cluster manager and get informed of the shard leader changes.

The endpoint that is registered is a gRPC or a RSocket endpoint address. There will be a way to send messages to a sub-component that is contained in the shard. Each shard is expected to be uniform and consistent hashing is used to locate a specific key. This key will be the tenant name, namespace name or topic name in the case of tenant inventory, namespace inventory or topic inventory components. The topic name is also the key for the topic controller.

Summary

This blog post describes the components and interactions participating in the topic lookup and assignment flow.

There are a lot of small details that will need to be addressed on the way to implementing this solution.

Each sub-component interaction procotol will be specified in detail after there’s some feedback from proof-of-concept implementation that is ongoing.

Related Posts

- Scalable distributed system principles in the context of redesigning Apache Pulsar's metadata solution

- Rearchitecting Apache Pulsar to handle 100 million topics, Part 3

- Rearchitecting Apache Pulsar to handle 100 million topics, Part 2

- Rearchitecting Apache Pulsar to handle 100 million topics, Part 1

- A critical view of Pulsar's Metadata store abstraction

- Pulsar's namespace bundle centric architecture limits future options

- Pulsar's high availability promise and its blind spot

- How do you define high availability in your event driven system?

- Correction: This blog is not about Apache Pulsar 3.0

- Possible worlds for Apache Pulsar 3.0 and making that actual